[Abstract]

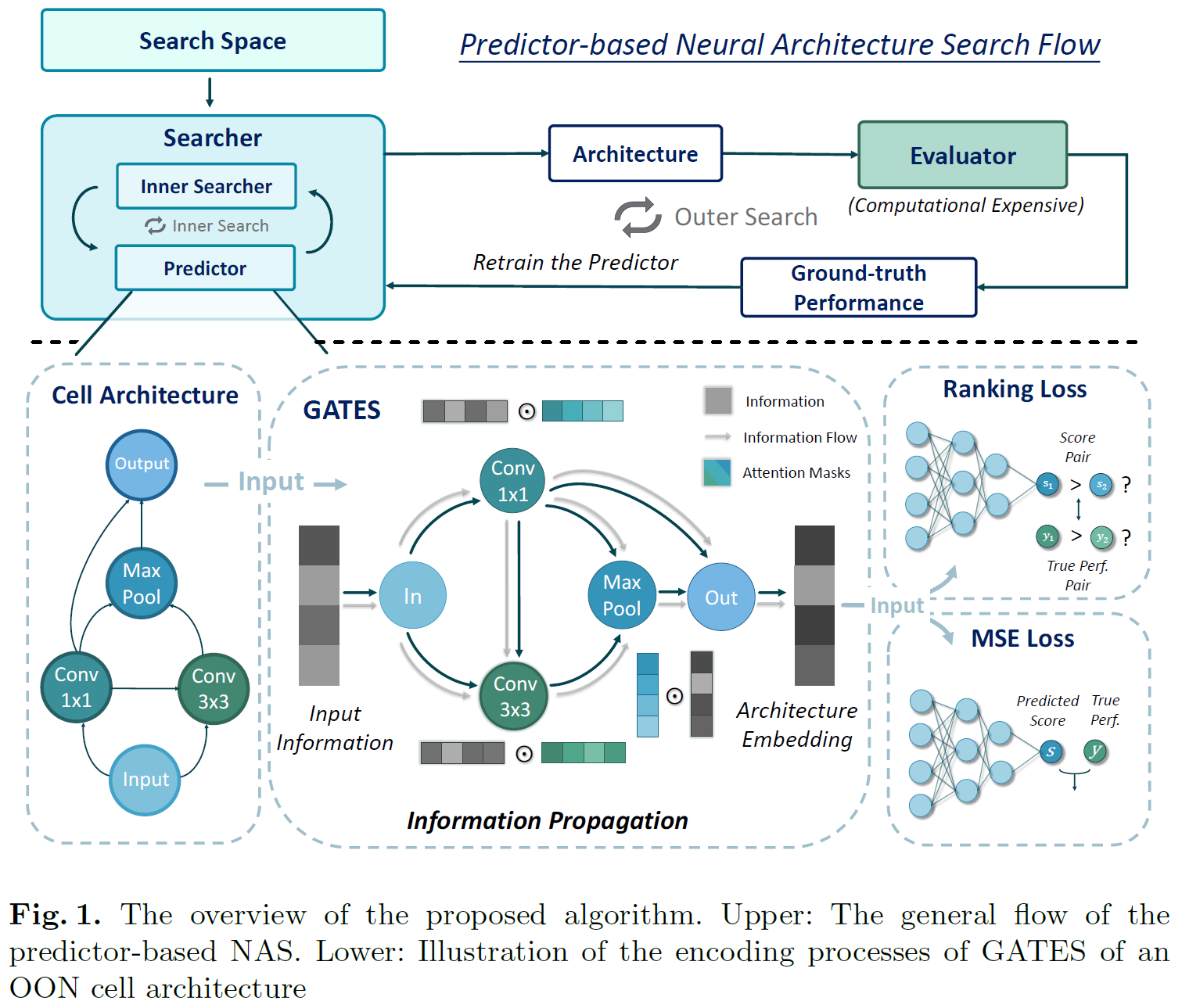

- novel Graph-based neural Architecture Encoding Scheme (GATES)

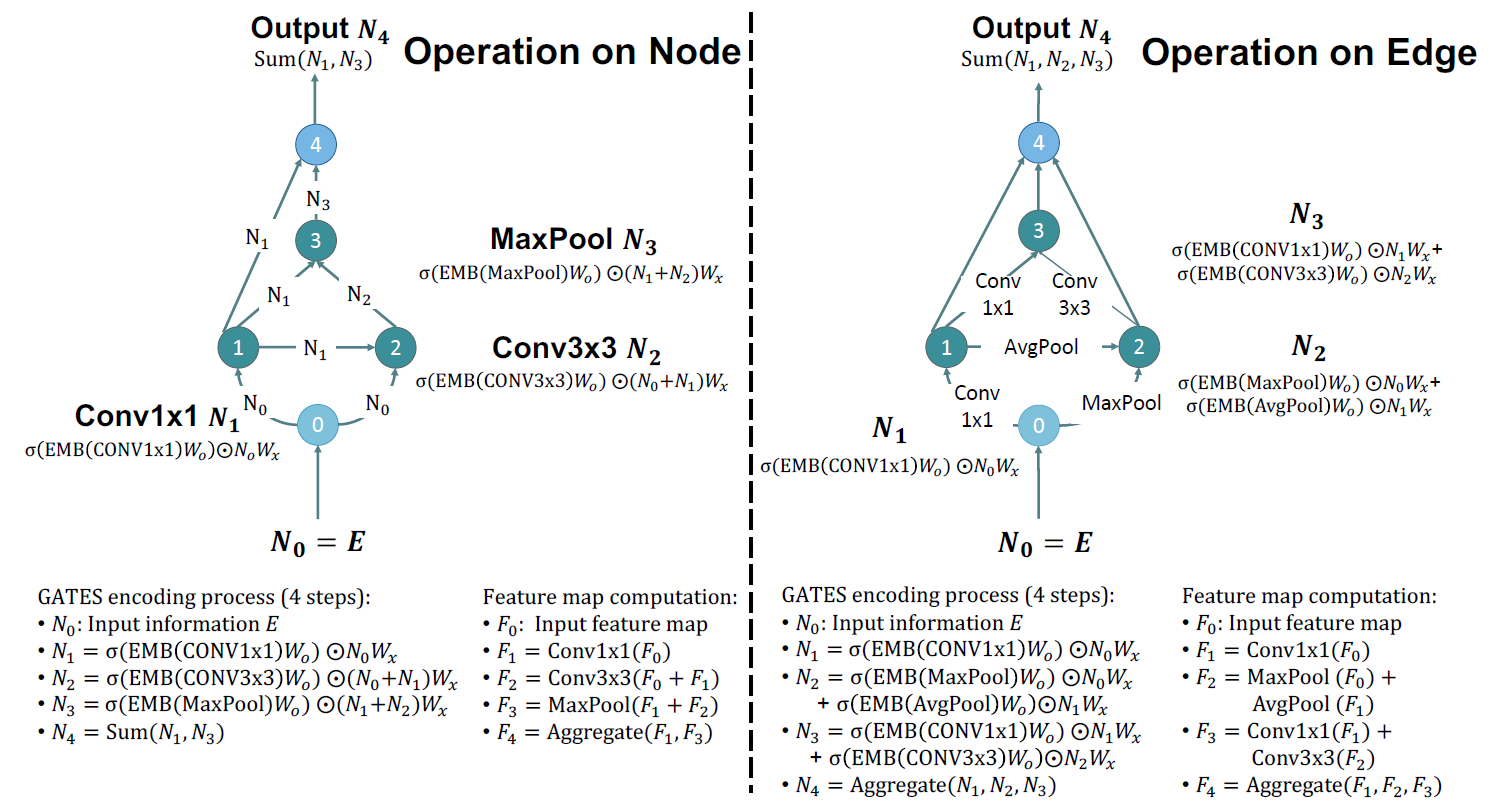

- encodes both “operation on node” (OON) and “operation on edge” (OOE) search spaces consistently

[1. Introduction]

- two key components in a NAS framework

- architecture searching module

- architecture evaluation module

- training every candidate architecture until convergence (thousands of architectures)

- computational burden is large

⇒ directions

1) evaluation: accelerate the evaluation of each architecture (judged by the ranking correlation between estimated and true performance)

2) searching: increase sample efficiency so that fewer architectures need to be evaluated

- solution: learn an approximate performance predictor → use the predictor to sample potentially good architectures

- the performance predictor predicts performance from an encoding of the architecture

- sequence-based scheme

- graph-based scheme: GCN

- for encoding operations, it is more natural to encode them as transforms of node attributes (mimicking how information is processed) than as node attributes themselves

- introduce GATES

- models information as the attributes of the input nodes

- models the data processing of each operation as a transform of that information

⇒ embeddings of isomorphic architectures are the same

[2. Related Work]

[2.1 Architecture Evaluation Module]

- parameter sharing: construct supernet → one-shot

- efficient, but the resulting performance estimates are not accurate and the approach is not generally applicable

[2.2 Architecture Searching Module]

- RL-based, evolutionary, gradient-based, and MCTS methods

- use a performance predictor to sample new architectures

- NASBot, NAO

[2.3 Neural Architecture Encoders]

- sequence-based: loss of information

- graph-based: hard to encode OOE (concatenation cannot be generalized)
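One concrete weakness of flattening a cell into a fixed-order sequence is that the result depends on an arbitrary node numbering. The sketch below assumes a hypothetical flat encoder (upper-triangular adjacency bits followed by one-hot operation labels, in node order) and a made-up operation vocabulary; it is an illustration in the spirit of sequence-based encoders, not any specific published one. Two isomorphic OON cells (same graph, parallel branches listed in the opposite order) get different vectors.

```python
from itertools import combinations

# Hypothetical operation vocabulary for an OON cell
OPS = ["input", "conv3x3", "conv1x1", "maxpool", "output"]

def sequence_encoding(ops, edges):
    """Flatten an OON cell into a vector that depends on the chosen node order.

    ops:   operation name of each node, in node order
    edges: set of (src, dst) pairs with src < dst
    """
    n = len(ops)
    # upper-triangular adjacency bits, read row by row
    adj_bits = [1 if (i, j) in edges else 0 for i, j in combinations(range(n), 2)]
    # one-hot operation label of each node, concatenated in node order
    op_bits = [1 if op == name else 0 for op in ops for name in OPS]
    return adj_bits + op_bits

cell_a = (["input", "conv3x3", "maxpool", "output"], {(0, 1), (0, 2), (1, 3), (2, 3)})
# the same cell with its two parallel branches numbered the other way round
cell_b = (["input", "maxpool", "conv3x3", "output"], {(0, 1), (0, 2), (1, 3), (2, 3)})
print(sequence_encoding(*cell_a) == sequence_encoding(*cell_b))  # False
```

A predictor trained on such vectors has to learn this invariance from data, whereas an encoder that aggregates over the graph structure gets it for free.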

[3. Method]

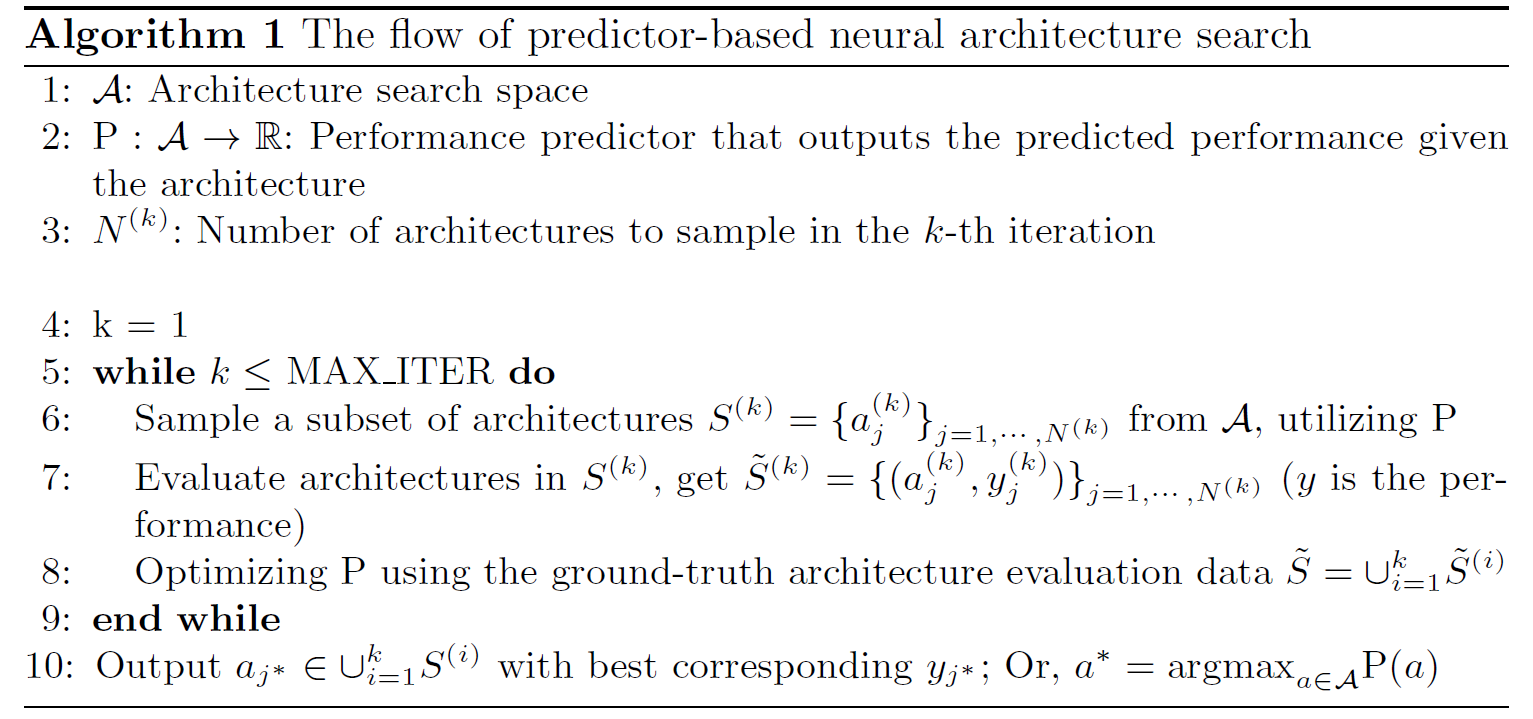

[3.1 Predictor-Based Neural Architecture Search]

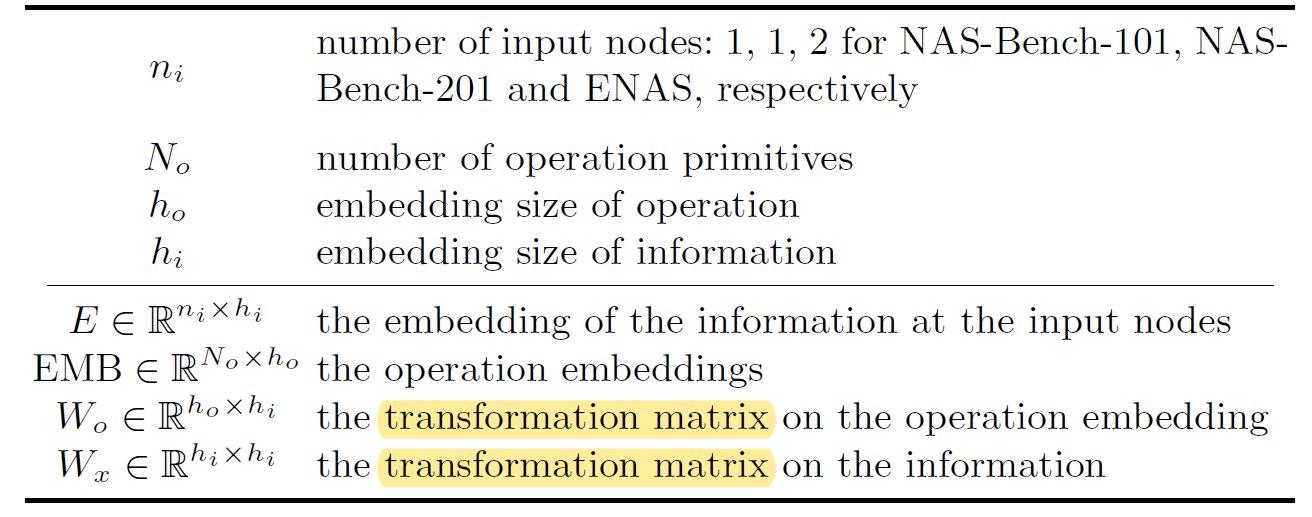

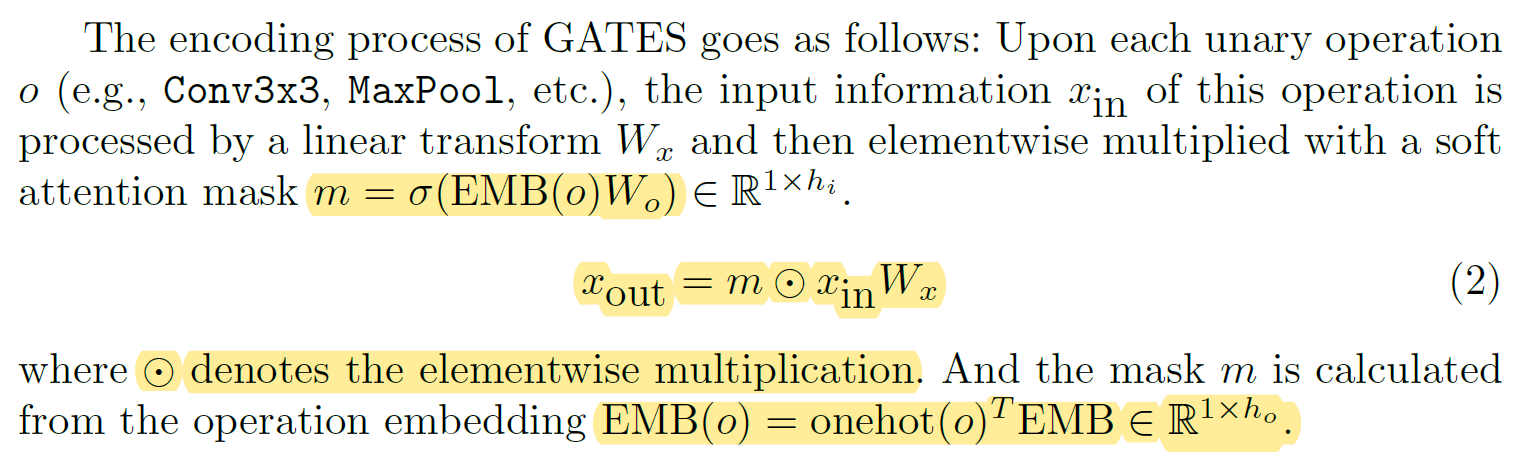

[3.2 GATES: A Generic Neural Architecture Encoder]

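- predictor: $\hat{s} = P(a) = \mathrm{MLP}(\mathrm{Enc}(a))$, where $\mathrm{Enc}$ is the architecture encoder (GATES) and $\hat{s}$ is the predicted score of architecture $a$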

- difference between GATES and GCN

- GATES models operations as the processing of the node attributes

- GCN models them as the node attributes themselves
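The contrast can be made concrete with a toy single-node update in both styles. This is a sketch under stated assumptions: 2-d vectors, made-up weights, a simplified GCN-style layer (ReLU of a linear map over the node's operation embedding summed with its neighbors' attributes), and a GATES-style transform written as a sigmoid gate computed from the operation embedding, multiplied elementwise with a linear transform of the incoming information. The gated form and all names (`OP_EMB`, `W_O`, `W_X`, `W_GCN`) are illustrative assumptions; the paper's exact layers may differ.

```python
import math

def matvec(W, x):
    # plain-Python matrix-vector product
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy parameters; every vector is 2-d
OP_EMB = {"conv3x3": [1.0, -1.0], "maxpool": [-0.5, 2.0]}
W_GCN = [[0.5, 0.2], [0.1, 0.3]]
W_O = [[1.0, 0.5], [-0.3, 1.0]]  # op embedding -> gate logits (assumed form)
W_X = [[0.8, -0.2], [0.3, 0.6]]  # linear transform of incoming information

def vec_sum(vectors, dim=2):
    total = [0.0] * dim
    for v in vectors:
        total = [a + b for a, b in zip(total, v)]
    return total

def gcn_style_update(op, neighbor_attrs):
    # GCN view: the op embedding IS the node attribute, aggregated with neighbors'
    agg = vec_sum([OP_EMB[op]] + neighbor_attrs)
    return [max(0.0, v) for v in matvec(W_GCN, agg)]  # ReLU(W_GCN @ agg)

def gates_style_update(op, incoming_info):
    # GATES view (assumed gated form): the op transforms the information flowing in
    x = vec_sum(incoming_info)
    gate = [sigmoid(z) for z in matvec(W_O, OP_EMB[op])]
    return [g * v for g, v in zip(gate, matvec(W_X, x))]

print(gcn_style_update("conv3x3", [[0.0, 0.0]]))    # nonzero: the op label itself is emitted
print(gates_style_update("conv3x3", [[0.0, 0.0]]))  # [0.0, 0.0]: nothing to transform
```

One visible consequence: with no incoming information the GCN-style node still outputs something derived from its operation label, while the GATES-style node outputs zeros, because the operation only acts on data flowing through it.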

- representational power

1) reasonable modeling of DAGs

2) intrinsic handling of DAG isomorphism (isomorphic architectures get the same embedding)
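Both properties can be illustrated with a compact GATES-style forward pass over an OON cell. This is a minimal sketch under assumptions, not the paper's exact parameterization: the cell is given as an op list plus an edge set in topological order, the input node carries a hypothetical learned information vector `INPUT_INFO`, each node sums the information of its predecessors and applies its operation as an assumed sigmoid-gated transform, and the output node's information is taken as the cell embedding. Because every node only aggregates over its predecessors, renumbering the nodes of an isomorphic cell does not change the embedding (up to floating-point rounding).

```python
import math

def matvec(W, x):
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

DIM = 2
INPUT_INFO = [1.0, 0.5]  # hypothetical learned "information" attribute of the input node
OP_EMB = {"conv3x3": [1.0, -1.0], "conv1x1": [0.2, 0.4], "maxpool": [-0.5, 2.0]}
W_O = [[1.0, 0.5], [-0.3, 1.0]]  # op embedding -> gate logits (assumed form)
W_X = [[0.8, -0.2], [0.3, 0.6]]  # linear transform of incoming information

def gates_encode(ops, edges):
    """Encode an OON cell; ops[0] == 'input', ops[-1] == 'output', nodes topologically ordered."""
    info = [list(INPUT_INFO)]
    for j in range(1, len(ops)):
        # sum the information produced by all predecessors of node j
        incoming = [0.0] * DIM
        for i in range(j):
            if (i, j) in edges:
                incoming = [a + b for a, b in zip(incoming, info[i])]
        if ops[j] == "output":
            info.append(incoming)  # the output node only aggregates
        else:
            # the node's operation acts as a gated transform of the incoming information
            gate = [sigmoid(z) for z in matvec(W_O, OP_EMB[ops[j]])]
            info.append([g * v for g, v in zip(gate, matvec(W_X, incoming))])
    return info[-1]  # the output node's information serves as the cell embedding

cell_a = (["input", "conv3x3", "maxpool", "output"], {(0, 1), (0, 2), (1, 3), (2, 3)})
cell_b = (["input", "maxpool", "conv3x3", "output"], {(0, 1), (0, 2), (1, 3), (2, 3)})  # isomorphic to cell_a
cell_c = (["input", "conv3x3", "maxpool", "output"], {(0, 1), (1, 2), (2, 3)})  # a chain, not isomorphic
print(gates_encode(*cell_a), gates_encode(*cell_b), gates_encode(*cell_c))
```

In the predictor $P(a)$, this embedding would then be fed to the MLP head to produce $\hat{s}$.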

[3.3 Neural Architecture Search Utilizing the Predictor]

- randomly sample n architectures → select the best k according to the predictor

- search with an evolutionary algorithm (EA) on the predicted scores for n steps → select the best k
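The two strategies can be sketched as below, assuming a trained predictor is available as a plain function `predict(arch) -> float` and an architecture is a tuple of operation indices, one per edge of a NAS-Bench-201-like cell (6 edges, 5 candidate operations). The toy predictor, the single-edge mutation, and the tournament/survivor selection are hypothetical stand-ins rather than the paper's settings. Only the k selected architectures would then be evaluated for real.

```python
import random

NUM_EDGES, NUM_OPS = 6, 5  # NAS-Bench-201-like cell: 5**6 = 15625 architectures

def random_arch(rng):
    return tuple(rng.randrange(NUM_OPS) for _ in range(NUM_EDGES))

def mutate(arch, rng):
    # change the operation on one randomly chosen edge
    pos = rng.randrange(NUM_EDGES)
    new = list(arch)
    new[pos] = rng.choice([o for o in range(NUM_OPS) if o != arch[pos]])
    return tuple(new)

def random_sample_top_k(predict, n, k, rng):
    # strategy 1: sample n architectures, keep the k with the best predicted score
    candidates = {random_arch(rng) for _ in range(n)}
    return sorted(candidates, key=predict, reverse=True)[:k]

def evolve_top_k(predict, n_steps, k, rng, population_size=20, tournament_size=3):
    # strategy 2: evolve on predicted scores for n_steps, keep the best k
    population = {random_arch(rng) for _ in range(population_size)}
    for _ in range(n_steps):
        # tournament selection on predicted scores, then mutate the winner
        contestants = rng.sample(sorted(population), min(tournament_size, len(population)))
        population.add(mutate(max(contestants, key=predict), rng))
        # survivor selection: keep the population_size best by predicted score
        population = set(sorted(population, key=predict, reverse=True)[:population_size])
    return sorted(population, key=predict, reverse=True)[:k]

def toy_predict(arch):
    # hypothetical stand-in for a trained predictor P(a)
    return sum(arch)

top_random = random_sample_top_k(toy_predict, n=100, k=5, rng=random.Random(0))
top_evolved = evolve_top_k(toy_predict, n_steps=200, k=5, rng=random.Random(0))
print(top_random, top_evolved)
```

Since every step only queries the predictor, n can be large without training any candidate network.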

[4. Experiments]

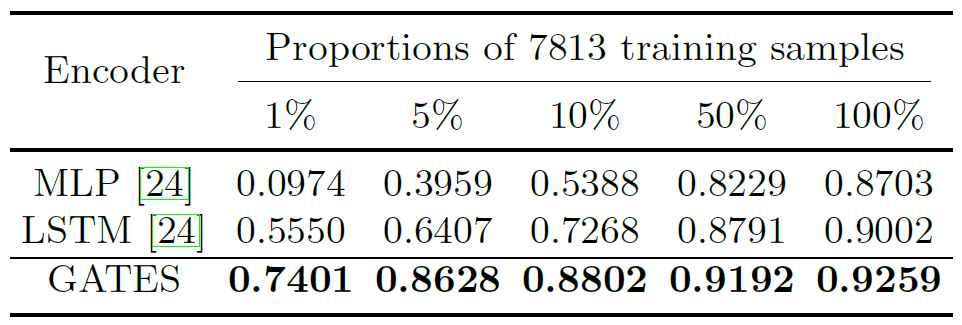

[4.2 Predictor Evaluation on NAS-Bench-201]

- 15,625 architectures in an OOE search space

- the first 50%: training data

- remaining 50%: test data
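Predictor quality on the held-out half is measured as a ranking correlation (e.g., Kendall's tau) between predicted and true performance. Below is a minimal sketch of the split and of Kendall's tau-a (no tie correction), with a hypothetical helper `first_half_split`; the accuracy numbers in the usage lines are made up for illustration, not NAS-Bench-201 results.

```python
from itertools import combinations

def first_half_split(items):
    # first 50% for training, the remaining items for testing
    mid = len(items) // 2
    return items[:mid], items[mid:]

def kendall_tau(pred, true):
    """Kendall's tau-a: (concordant - discordant) / number of pairs; tied pairs count as neither."""
    assert len(pred) == len(true) and len(pred) >= 2
    concordant = discordant = 0
    for i, j in combinations(range(len(pred)), 2):
        s = (pred[i] - pred[j]) * (true[i] - true[j])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    n_pairs = len(pred) * (len(pred) - 1) // 2
    return (concordant - discordant) / n_pairs

true_acc = [0.91, 0.85, 0.88, 0.93]    # made-up test-set accuracies
pred_good = [0.70, 0.60, 0.65, 0.80]   # same ordering as true_acc
pred_mixed = [0.70, 0.65, 0.60, 0.80]  # one pair swapped
print(kendall_tau(pred_good, true_acc))   # 1.0
print(kendall_tau(pred_mixed, true_acc))  # 0.666... (= 4/6)
train, test = first_half_split(list(range(15625)))
print(len(train), len(test))  # 7812 7813
```

Only the relative order matters here, which matches how the predictor is used: to pick the top candidates rather than to estimate exact accuracies.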